We are all familiar with haptic output - when our mobile phones vibrate, or our game controllers rumble, we are experiencing synthetic touch-based sensations. But these are fairly crude signals, and are difficult to use with any degree of nuance. How might one author subtle haptic sensations? The answer, I supposed, might lie in sound - in the rumble of a bass speaker, or the subtle vibration of a guitar as it is being played.

In this design study conducted at Microsoft Research, I, alongside researcher David Sweeney, built a device to aid in exploring this form of haptic actuation. Using cantilevered piezoelectric elements, we created a low-latency, high-fidelity system for reproducing and creating tactile sensations. What’s more, we could author these haptic signals using standard audio editing software. Here are my design notes for these experiments in haptic actuation.

There are a number of ways that we might generate and control tactile sensations using materials, technologies, and artefacts. We might rely upon engagement with static materials, force feedback systems, or vibrational haptics.

Vibrational Haptic Actuators.

A range of actuator devices can be used to create vibrational haptic signals. What follows is a benefit / drawback summary of three commonly used technologies.

Pager Motors (Left)

‘Motors with an Unbalanced Weight’

Benefits : Low cost, low voltage, small size.

Drawbacks : Slow response (150ms), single frequency, high energy consumption, audible

Linear Resonance Actuators (Centre)

‘Magnets on Springs’

Benefits : Fast response (50ms), low voltage / energy consumption

Drawbacks : Single (sine) resonant frequency, audible

Piezo Elements (Right)

‘Speakers without Magnets’

Benefits : Very fast response (15ms), inaudible under 500Hz, variable frequency

Drawbacks : Medium energy consumption

Exploring Cantilevered Piezo Actuators.

We used a cantilevered piezo actuator as the starting point for a series of design explorations around the use of vibrational haptic interfaces. In order to achieve nuanced control of vibrational actuation, we first needed to implement a test circuit.

Our Control Circuit.

We chose to adapt a TI DRV2667 controller circuit to drive the piezo element. In addition to standard electronic inputs and interfaces (I2C), it allowed for the use of line-level audio as a means of actuation.

The actuator element we employed was a Piezoelectric Parallel Bimorph Actuator, obtained from Steiner and Martins, Inc.

Housing the Actuator.

After some experimentation, we found that the strength and quality of the haptic response depended upon the form of the actuator's container.

We designed (using SolidWorks) and fabricated (using 3D printing and workshop techniques) a housing for the actuator. The design was carefully planned to maximise the strength and fidelity of the vibrations generated by the piezo element, whilst minimising the generation of audible sounds.

Driving the Actuator through Sound.

Once the unit was constructed and connected to the circuit we created, we were able to leverage the benefits of an audio-driven haptic actuator.
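To make the idea of an audio-driven actuator concrete, here is a minimal Python sketch that synthesises a short, decaying low-frequency pulse and saves it as a WAV file that could be played out of any audio jack. It is an illustration only, not the tooling used in the study: the function names, the 44.1kHz sample rate, the 150Hz frequency, and the decay rate are all assumptions chosen for the example.

```python
import math
import struct
import wave

SAMPLE_RATE = 44100  # assumed; a common rate for line-level audio


def sine_burst(freq_hz, duration_s, decay_per_s=8.0, amplitude=0.8):
    """Return float samples in [-1, 1] for an exponentially decaying sine burst."""
    n = int(SAMPLE_RATE * duration_s)
    return [
        amplitude
        * math.exp(-decay_per_s * i / SAMPLE_RATE)
        * math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE)
        for i in range(n)
    ]


def write_wav(path, samples):
    """Write mono float samples to `path` as 16-bit PCM."""
    frames = b"".join(
        # Clamp to [-1, 1] before scaling to the signed 16-bit range.
        struct.pack("<h", int(max(-1.0, min(1.0, s)) * 32767))
        for s in samples
    )
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)
        wav.setframerate(SAMPLE_RATE)
        wav.writeframes(frames)
```

Calling `write_wav("pulse_150hz.wav", sine_burst(150.0, 0.25))` would produce a quarter-second pulse that can be opened, layered, and edited in any audio editor like any other sound file.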

The use of audio as a source allowed us to leverage existing toolkits and technologies as a means of testing and prototyping various types of haptic response. Rather than re-inventing the wheel, we had ‘tried and true’ technologies for manipulation and sequencing of appropriate media. We made use of many public sources of audio data, conceptually repurposed as haptic content and modified accordingly.
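One plausible way to modify found audio for haptic use is to attenuate its higher frequencies, so that most of the remaining energy sits in the range where the piezo element is felt rather than heard. The sketch below is a simple one-pole low-pass filter in Python. It is an assumption about what such a modification could look like, not a record of the processing we applied, and the 500Hz cutoff in the usage note is borrowed from the piezo figures listed earlier.

```python
import math


def low_pass(samples, cutoff_hz, sample_rate=44100):
    """Apply a one-pole (RC-style) low-pass filter to a list of float samples."""
    rc = 1.0 / (2 * math.pi * cutoff_hz)  # time constant for the cutoff
    dt = 1.0 / sample_rate
    alpha = dt / (rc + dt)  # smoothing factor between 0 and 1
    out = []
    y = 0.0
    for x in samples:
        y += alpha * (x - y)  # move the output a fraction of the way to the input
        out.append(y)
    return out
```

For example, `low_pass(samples, cutoff_hz=500.0)` leaves slow swells largely intact while softening sharp, high-pitched detail. A one-pole filter rolls off gently, so a steeper filter from an audio editor would be the better choice where audible content must be strongly suppressed.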

Preliminary tools for rich haptic experiments.

At this point, we were ready to test out our preliminary rich haptic actuator system.

I was able to quickly author a browser-based haptic sequencer using simple WebAudio and JavaScript libraries. This sequencer allowed for control and authoring of audio samples and sequences. The sound samples loaded into the sequencer were selected for their haptic potential, and included various types of heartbeats, motors, pings, and pulses.
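The core of a step sequencer is small enough to sketch. The original was built in the browser with WebAudio and JavaScript; the version below is an offline Python approximation that mixes named samples into a single buffer according to an on/off step pattern. The function name, parameters, and pattern format are hypothetical and are not taken from the original tool.

```python
def render_sequence(pattern, clips, bpm=120, steps_per_beat=4, sample_rate=44100):
    """Mix clips into one buffer according to a step pattern.

    pattern: dict mapping clip name -> list of 0/1 flags, one per step.
    clips:   dict mapping clip name -> list of float samples in [-1, 1].
    """
    step_len = int(sample_rate * 60 / bpm / steps_per_beat)  # samples per step
    n_steps = max(len(steps) for steps in pattern.values())
    longest = max(len(clips[name]) for name in pattern)
    # Leave room for a clip triggered on the final step to play out fully.
    out = [0.0] * (n_steps * step_len + longest)
    for name, steps in pattern.items():
        clip = clips[name]
        for step, on in enumerate(steps):
            if not on:
                continue
            start = step * step_len
            for i, s in enumerate(clip):
                out[start + i] += s  # overlapping clips are summed (layered)
    # Scale down only if layering pushed the mix past full scale.
    peak = max(abs(s) for s in out)
    if peak > 1.0:
        out = [s / peak for s in out]
    return out
```

A pattern such as `{"heartbeat": [1, 0, 0, 0, 1, 0, 0, 0], "ping": [0, 0, 1, 0, 0, 0, 1, 0]}` would place a heartbeat on every fourth step with a ping between beats, assuming clips with those names have been loaded as lists of samples.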

Using the Tools.

The audio signal from this sequencer was routed into the piezo-driven actuator using standard audio leads plugged into a headphone jack. The actuator then converted the audio signal into haptic output.

When holding the actuator, users were able to clearly and instantly identify various types of sound sample based purely on the haptic response generated by the sound waves. Heartbeats 'felt like' heartbeats, motors felt like motors, and so on.

A Synesthetic Approach to Haptic Design.

Our system allowed us to use metaphors from other senses to understand and discuss tactile sensations.

Our approach to exploring rich haptics was fundamentally synesthetic in nature - we adopted tools, techniques, and strategies for engagement from both visual and audio design. In addition to the technical benefits this provided, it also gave us a common and established language for clarifying details and forming ideas.

Audio Metaphors for Haptic Design.

Our discussions of layering composition, sequencing, timing, filtering and modification of assets, harmony, and the like were grounded in the technical language of audio manipulation, although the final outputs were tactile rather than sonic in nature. This was valuable from a practical, experimental perspective, and also shaped our ideas for potential further explorations of rich haptic experiences.

Recording haptics.

How might we capture personal haptic data?

Just as a speaker is also a microphone, the piezo element driving our haptic actuator might be usable to both play and record tactile signals.

How might we capture our daily touch-centric experiences and play them back, what forms might devices created to gather such input take, and to what use might we put the gathered data?

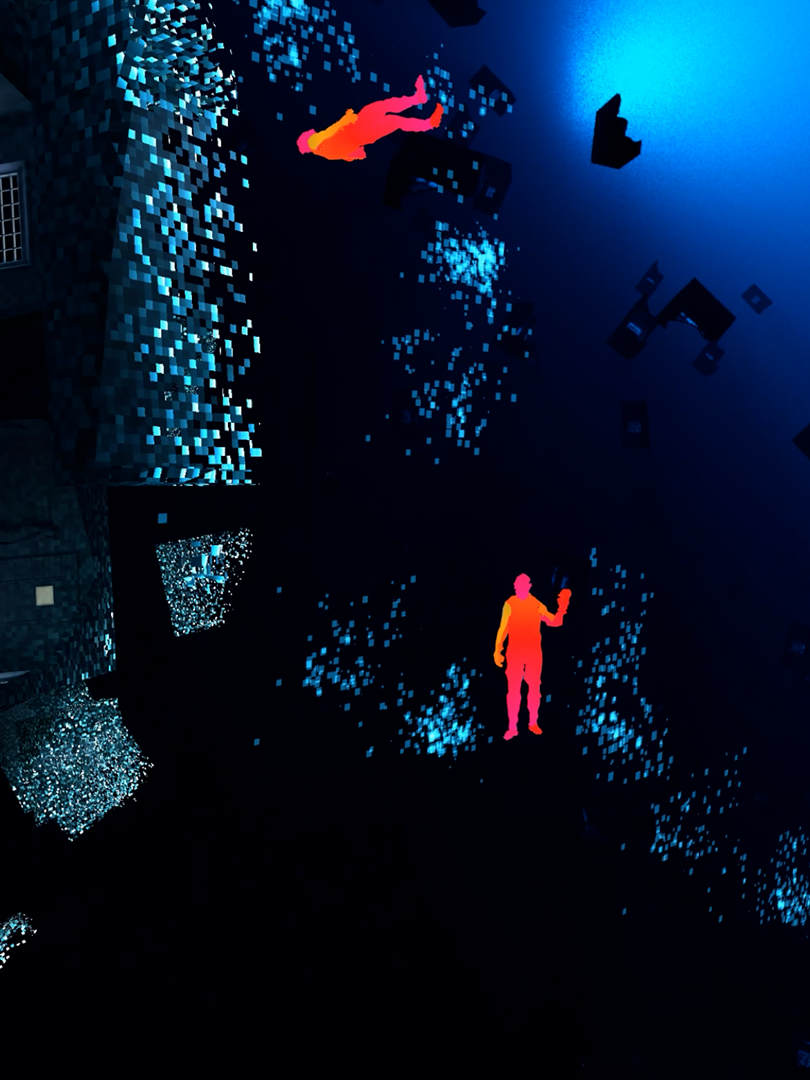

Nuanced haptics and 3D worlds.

Our experiments led us to consider how we might leverage bump maps as a means of generating tactile response. In-game bump maps provide visual representations of surface texture, for use in more realistic image rendering. We might potentially make additional use of these bump-mapped textures, as a means of generating audio signals that represent surface textures. This would allow the integration of haptic outputs in gaming, AR, and VR contexts with little additional development cost, as the underlying data we would employ is already present within virtual environments.
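As a thought experiment, the conversion from texture to signal might look something like the Python sketch below. It treats a single row of a bump map as a list of height values and 'drags a finger' across it at constant speed, reading off the height at each audio sample. This is an untested idea rather than something we built: the function name, the texel size, and the stroke speed are all assumptions, and extracting the row from an actual image is left out.

```python
def texture_to_signal(heights, texel_size_m, speed_m_per_s, duration_s,
                      sample_rate=44100):
    """Scan a 1-D height profile at constant speed and return a signal in [-1, 1].

    heights:       one row of bump-map values (any consistent unit).
    texel_size_m:  assumed physical width of one texel, in metres.
    speed_m_per_s: assumed speed of the finger or cursor across the surface.
    """
    n = int(sample_rate * duration_s)
    mean = sum(heights) / len(heights)
    span = (max(heights) - min(heights)) or 1.0  # avoid dividing by zero on flat rows
    out = []
    for i in range(n):
        pos = (i / sample_rate) * speed_m_per_s / texel_size_m  # position in texels
        i0 = int(pos) % len(heights)
        i1 = (i0 + 1) % len(heights)  # wrap so the texture tiles
        frac = pos - int(pos)
        h = heights[i0] * (1 - frac) + heights[i1] * frac  # linear interpolation
        out.append((h - mean) / span)  # centre on the mean height and normalise
    return out
```

Under these assumptions, ridges spaced 2mm apart and stroked at 0.1 metres per second would yield a 50Hz signal, and moving faster would raise the frequency, much as dragging a fingernail across corduroy does.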

Avenues for Further Investigation.

Explorations of how vibrational haptics are experienced by the deaf community, automotive mechanics, machine operators, musicians, instrument makers, and other potentially knowledgeable parties.

Explorations of Background Haptics vs. Foreground Haptics.

Explorations of Impulse haptics vs. Sustained haptics.

Building taxonomies of audio-haptic sensations for use in design activity.

Empirically testing the use of layered signals - how do we perceive them and how do we differentiate distinct signals in a composition formed from multiple haptic impulses?

Gaming and AR / VR Applications : how can nuanced haptic design be used to aid in immersive virtual environments, and how might the technologies involved be effectively reconciled with existing workflows and methods of virtual content creation?