PROJECT DESCRIPTION

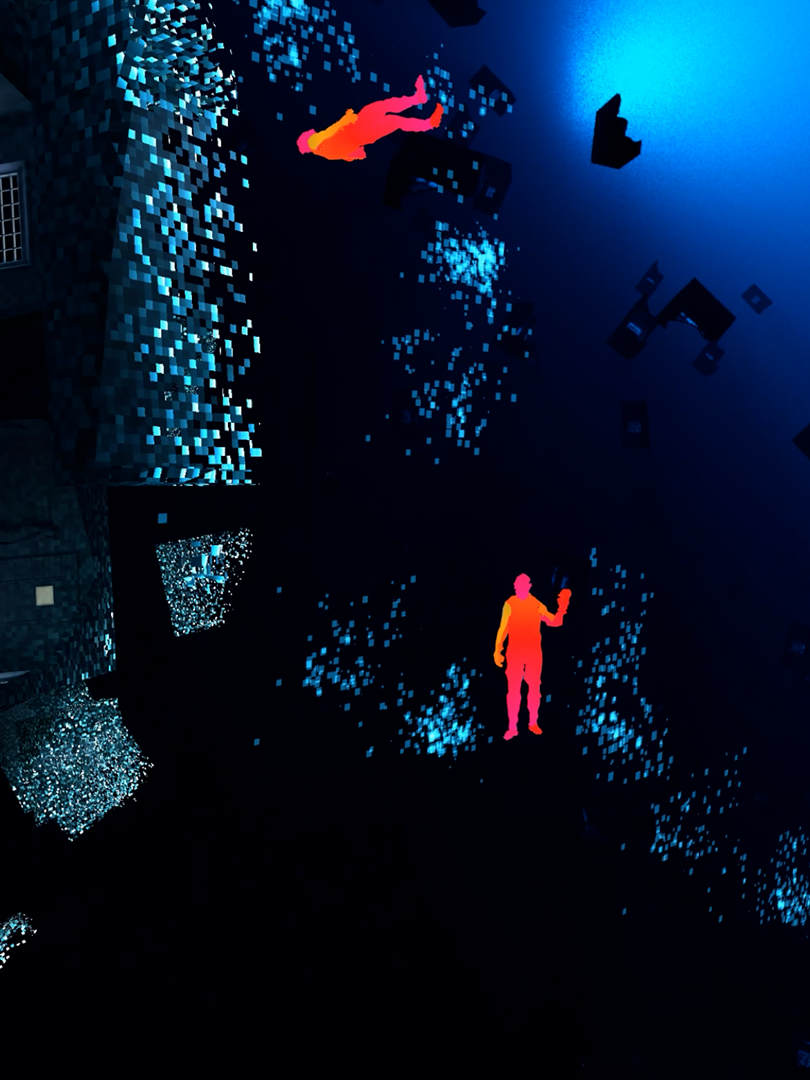

This design study, created whilst I was resident at Microsoft Research Cambridge, explored the potential uses of a then-experimental hand-tracking technology developed in the lab, which used the Kinect hardware to provide 23 tracked points of articulation per hand. Most of the hand-tracking technologies I had explored before this tended to assume screen-based use cases, and I was curious to see how this emerging technology might be used in situated environments rather than in front of a monitor. The world, after all, involves more than just screens and displays!

MAKING THE PROJECT

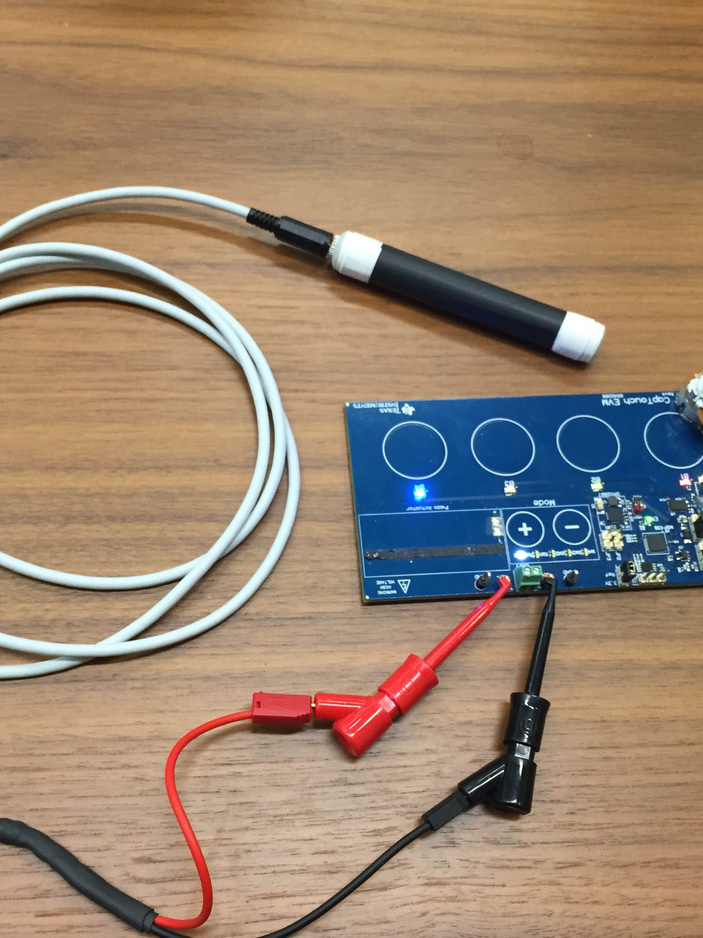

Alongside researchers Maria Kustikova and David Sweeney, I first explored the experimental hand-tracking software within the Unity game development environment, integrating it with some motion-tracking code libraries I’d written and testing the capabilities and limitations of the system. I then developed a number of scenarios involving both its connection to IoT-enabled devices and its use in concert with non-enabled objects. This video, presented at Microsoft’s internal 2016 Research Hackathon, documents some of these use cases.