PROJECT DESCRIPTION

Lost Origin was a collaborative, multidisciplinary research project, primarily backed by the Audience of the Future programme of UK Research and Innovation (UKRI), part of the Industrial Strategy Challenge Fund’s initiative to innovate audience experiences. It was the culmination of extensive research and development aimed at shaping how future audiences engage with entertainment and visitor experiences. The project brought together renowned storytellers, technology firms, and academics to create ground-breaking immersive experiences through innovative storytelling techniques.

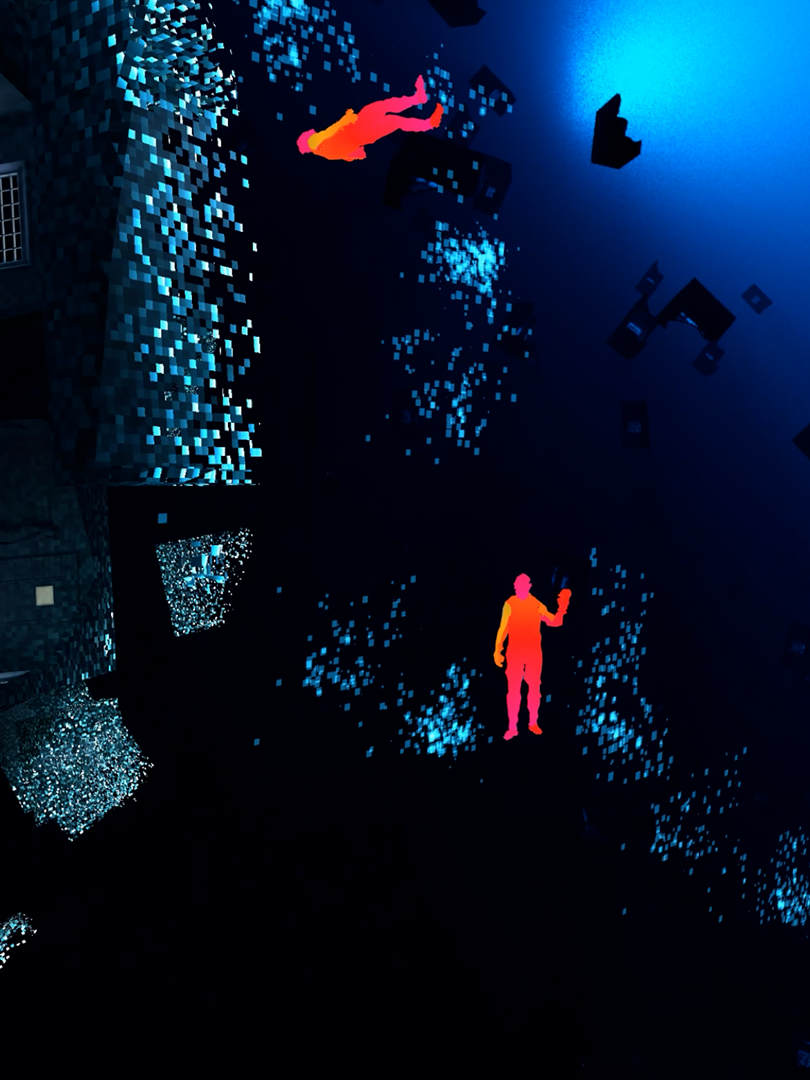

In Lost Origin, audience members were recruited into Wing 7, a covert unit tasked with infiltrating Origin, a suspected front for an illicit dark web marketplace hidden within a warehouse in Hoxton. Across the immersive adventure, audiences navigated intricately designed, interactive environments built with cutting-edge technology, which drew them into the narrative and evoked a strong sense of wonder and engagement.

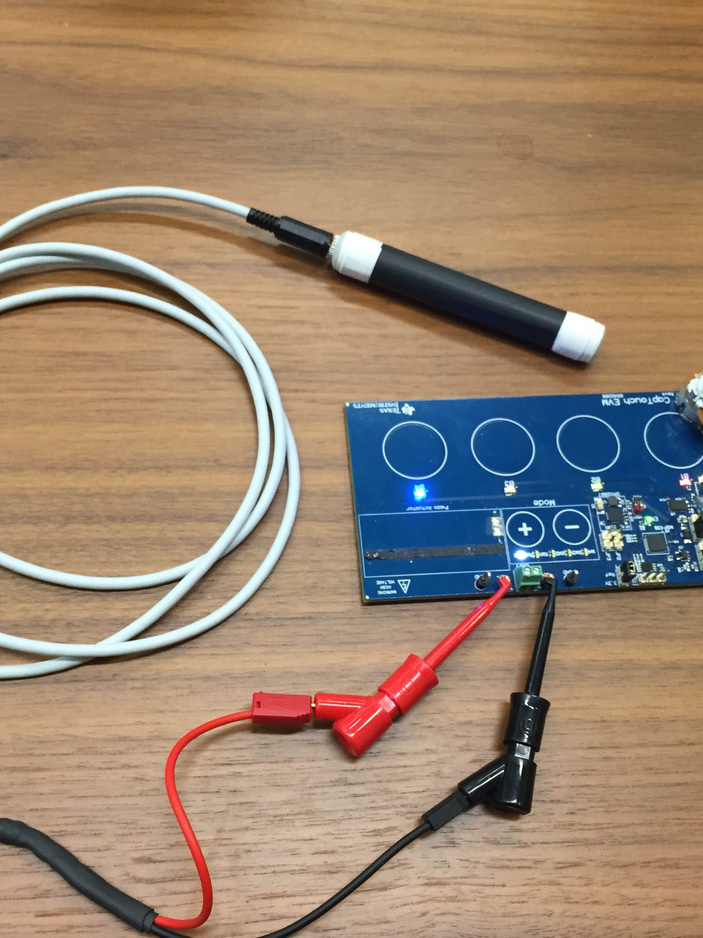

Lost Origin explored new ways of exploiting the interplay between the physical and digital realms. Using computer vision, light field scanning, and gesture recognition technologies, participants equipped with Magic Leap headsets experienced augmented realities from different perspectives, which allowed individual exploration within a cohesive team dynamic. Interactive projections combined Intel RealSense depth-sensing hardware, AI-driven human segmentation, and NuiTrack pose detection software with in-house systems that synchronized physical actions with the augmented reality narrative.

MY ROLE IN THE PROJECT

My main responsibility in the Lost Origin project was the design and development of a live, gesture-based immersive projection system, capable of tracking up to 10 users in 3D simultaneously. I achieved this using an array of Intel RealSense 3D cameras, NuiTrack AI-based skeleton tracking, and custom-written gesture analysis code. The system also included multiple redundancies for 100% reliability in live event contexts, and UDP-based network control capabilities for easy integration with show control systems.
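To illustrate the general idea of gesture analysis feeding a UDP-based show control link, here is a minimal Python sketch. It is not the production code: the joint names, the "hand raised" rule, the 10 cm margin, the JSON message layout, and the host and port are all assumptions made for illustration, and the skeleton data is a plain dictionary rather than output from the NuiTrack SDK.

```python
import json
import socket
from dataclasses import dataclass


@dataclass
class Joint:
    """A tracked joint position in metres (y is assumed to point up)."""
    x: float
    y: float
    z: float


def hand_raised(skeleton: dict[str, Joint], margin: float = 0.10) -> bool:
    """Return True when either hand is more than `margin` metres above the head.

    The joint names ("head", "left_hand", "right_hand") are hypothetical.
    """
    head = skeleton["head"]
    return any(skeleton[name].y > head.y + margin
               for name in ("left_hand", "right_hand"))


def encode_event(user_id: int, gesture: str) -> bytes:
    """Pack a gesture event as a small JSON datagram (assumed message format)."""
    return json.dumps({"user": user_id, "gesture": gesture}).encode("utf-8")


def send_event(payload: bytes, host: str = "127.0.0.1", port: int = 9000) -> None:
    """Send one datagram to a show control listener (host and port are placeholders)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(payload, (host, port))


if __name__ == "__main__":
    skeleton = {
        "head": Joint(0.0, 1.70, 2.0),
        "left_hand": Joint(-0.3, 1.00, 2.0),
        "right_hand": Joint(0.3, 1.95, 2.0),
    }
    if hand_raised(skeleton):
        send_event(encode_event(user_id=1, gesture="hand_raised"))
```

UDP is connectionless, so a sender like this does not block if the receiving system is offline, which suits fire-and-forget cues; the trade-off is that delivery is not guaranteed.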

Projection-mapped visuals were built from handcrafted assets integrated with the Unity Visual Effect Graph. In addition, I created process-specific multiuser VR tools for exploring and previsualising aspects of the projection-mapped space offsite, a vital feature for this project, as production was completely remote due to the COVID-19 lockdown.

I also developed the gestural interaction system used by the Magic Leap sequence of the application, and worked to ensure precise alignment between the AR headsets and the physical set build.